Publications – Journals

Bounds on mutual information of mixture data for classification tasks

To quantify the optimum performance for classification tasks, the Shannon mutual information is a natural information-theoretic metric, as it is directly related to the probability of error. The data produced by many imaging systems can be modeled by mixture distributions. The mutual information between mixture data and the class label has neither an analytical expression nor an efficient computational algorithm. We introduce a variational upper bound, a lower bound, and three approximations, all employing pair-wise divergences between mixture components. We compare the new bounds and approximations with Monte Carlo stochastic sampling and bounds derived from entropy bounds. To conclude, we evaluate the performance of the bounds and approximations through numerical simulations.

For details, see Yijun Ding and Amit Ashok, J. Opt. Soc. Am. A 39, 1160-1171 (2022)
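
As a rough illustration of the Monte Carlo baseline mentioned in the abstract above, the sketch below estimates I(X; C) between one-dimensional Gaussian-mixture data X and a class label C by averaging log p(x|c)/p(x) over samples. It is not the authors' code: the function names, the one-dimensional setting, and the sample count are assumptions made for illustration only.

```python
import math
import random


def gauss_pdf(x, mean, std):
    """Density of a 1-D Gaussian at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def mixture_pdf(x, components):
    """Density of a mixture given as a list of (weight, mean, std)."""
    return sum(w * gauss_pdf(x, m, s) for w, m, s in components)


def sample_mixture(rng, components):
    """Draw one sample: pick a component by weight, then sample it."""
    weights = [w for w, _, _ in components]
    _, mean, std = rng.choices(components, weights=weights, k=1)[0]
    return rng.gauss(mean, std)


def mc_mutual_information(priors, class_mixtures, n_samples=20000, seed=0):
    """Monte Carlo estimate of I(X; C) in nats (hypothetical helper)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        # Sample a class label, then data from that class's mixture.
        c = rng.choices(range(len(priors)), weights=priors, k=1)[0]
        x = sample_mixture(rng, class_mixtures[c])
        p_x_given_c = mixture_pdf(x, class_mixtures[c])
        # Marginal density p(x) is itself a mixture over all classes.
        p_x = sum(p * mixture_pdf(x, mix)
                  for p, mix in zip(priors, class_mixtures))
        total += math.log(p_x_given_c / p_x)
    return total / n_samples
```

With two equally likely, well-separated classes the estimate approaches log 2 nats (the label entropy), and with identical class distributions it is zero. The sampling cost of estimates like this is one reason to look for cheaper bounds and approximations.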

Invertibility of multi-energy X-ray transform

The goal is to provide a sufficient condition for the invertibility of a multi-energy (ME) X-ray transform. The energy-dependent X-ray attenuation profiles can be represented by a set of coefficients using the Alvarez-Macovski (AM) method. An ME X-ray transform is a mapping from N AM coefficients to N noise-free energy-weighted measurements, where N≥2.

For details, see Yijun Ding, Eric W Clarkson, and Amit Ashok, Med Phys. 2021 Oct;48(10):5959-5973. doi: 10.1002/mp.15168. Epub 2021 Aug 26. PMID: 34390587; PMCID: PMC8568641.

Visibility of quantization errors in reversible JPEG2000

Image compression systems that exploit the properties of the human visual system have been studied extensively over the past few decades. For the JPEG2000 image compression standard, all previous methods that aim to optimize perceptual quality have considered the irreversible pipeline of the standard. In this work, we propose an approach for the reversible pipeline of the JPEG2000 standard. We introduce a new methodology to measure visibility of quantization errors when reversible color and wavelet transforms are employed. Incorporation of the visibility thresholds using this methodology into a JPEG2000 encoder enables creation of scalable codestreams that can provide both near-threshold and numerically lossless representations, which is desirable in applications where restoration of original image samples is required. Most importantly, this is the first work that quantifies the bitrate penalty incurred by the reversible transforms in near-threshold image compression compared to the irreversible transforms.

For details, see Feng Liu, Eze Ahanonu, Michael W. Marcellin, Yuzhang Lin, Amit Ashok, and Ali Bilgin, Signal Processing: Image Communication Volume 84, May 2020, 115812

Approaching quantum-limited imaging resolution without prior knowledge of the object location

Passive imaging receivers that demultiplex an incoherent optical field into a set of orthogonal spatial modes prior to detection can surpass canonical diffraction limits on spatial resolution. However, these mode-sorting receivers exhibit sensitivity to contextual nuisance parameters (e.g., the centroid of a clustered or extended object), raising questions on their viability in realistic scenarios where prior information about the scene is limited. We propose a multistage detection strategy that segments the total recording time between different physical measurements to build up the required prior information for near quantum-optimal imaging performance at sub-Rayleigh length scales. We show, via Monte Carlo simulations, that an adaptive two-stage scheme that dynamically allocates recording time between a conventional direct detection measurement and a binary mode sorter outperforms idealized direct detection alone when no prior knowledge of the object centroid is available, achieving one to two orders of magnitude improvement in mean squared error for simple estimation tasks. Our scheme can be generalized for more sophisticated tasks involving multiple parameters and/or minimal prior information.

For details, see Michael R. Grace, Zachary Dutton, Amit Ashok, and Saikat Guha, J. Opt. Soc. Am. A 37, 1288-1299 (2020)

Attaining the quantum limit of superresolution in imaging an object’s length via predetection spatial-mode sorting

We consider estimating the length of an incoherently radiating quasimonochromatic extended object of length much smaller than the traditional diffraction limit, the Rayleigh length. This is the simplest abstraction of the problems of estimating the diameter of a star in astronomical imaging or the dimensions of a cellular feature in biological imaging. We find, as expected by the Rayleigh criterion, that the Fisher information (FI) of the object’s length, per integrated photon, vanishes in the limit of small sub-Rayleigh length for an ideal image-plane direct-detection receiver. With an image-plane Hermite-Gaussian (HG) mode sorter followed by direct detection, we show that this normalized FI does not diminish with decreasing object length. The FI per photon of both detection strategies gradually decreases as the object length greatly exceeds the Rayleigh limit, due to the relative inefficiency of information provided by photons emanating from near the center of the object about its length. We evaluate the quantum Fisher information per unit integrated photon and find that the HG mode sorter exactly achieves this limit at all values of the object length. Further, a simple binary mode sorter maintains the advantage of the full mode sorter at highly sub-Rayleigh lengths. In addition to this FI analysis, we quantify improvement in terms of the actual mean-square error of the length estimate using predetection mode sorting. We consider the effect of imperfect mode sorting and show that the performance improvement over direct detection is robust over a range of sub-Rayleigh lengths.

For details, see Zachary Dutton, Ronan Kerviche, Amit Ashok, and Saikat Guha, American Physical Society in Physical Review A, 99(3) pp. 33847-33854, 2019.

Pathological image compression for big data image analysis: Application to hotspot detection in breast cancer

In this paper, we propose a pathological image compression framework to address the needs of Big Data image analysis in digital pathology. Big Data image analytics require analysis of large databases of high-resolution images using distributed storage and computing resources along with transmission of large amounts of data between the storage and computing nodes that can create a major processing bottleneck. The proposed image compression framework is based on the JPEG2000 Interactive Protocol and aims to minimize the amount of data transfer between the storage and computing nodes as well as to considerably reduce the computational demands of the decompression engine. The proposed framework was integrated into hotspot detection from images of breast biopsies, yielding considerable reduction of data and computing requirements.

For details, see Khalid K. Niazi, Yuzhang Lin, Feng Liu, Amit Ashok, Michael W. Marcellin, G. Tozbikian, Metin N. Gurcan, and Ali Bilgin, Artificial Intelligence in Medicine, 95 pp. 82-87, 2019.

Eavesdropping of display devices by measurement of polarized reflected light

Display devices, or displays, such as those utilized extensively in cell phones, computer monitors, televisions, instrument panels, and electronic signs, are polarized light sources. Most displays are designed for direct viewing by human eyes, but polarization imaging of reflected light from a display can also provide valuable information. These indirect (reflected/scattered) photons, which are often not in direct field-of-view and mixed with photons from the ambient light, can be extracted to infer information about the content on the display devices. In this work, we apply Stokes algebra and Mueller calculus with the edge overlap technique to the problem of extracting indirect photons reflected/scattered from displays. Our method applies to recovering information from linearly and elliptically polarized displays that are reflected by transmissive surfaces, such as glass, and semi-diffuse opaque surfaces, such as marble tiles and wood furniture. The technique can further be improved by applying Wiener filtering.

For details, see Yitian Ding, Ronan Kerviche, Amit Ashok, and Stanley Pau, Applied Optics, 57, pp. 5483-5491, 2018.

Convolutional Neural Networks for Non-iterative Reconstruction of Compressively Sensed Images

Traditional algorithms for compressive sensing recovery are computationally expensive and are ineffective at low measurement rates. In this paper, we propose a data-driven noniterative algorithm to overcome the shortcomings of earlier iterative algorithms. Our solution, ReconNet, is a deep neural network, which is learned end-to-end to map block-wise compressive measurements of the scene to the desired image blocks. Reconstruction of an image becomes a simple forward pass through the network and can be done in real time. We show empirically that our algorithm yields reconstructions with higher peak signal-to-noise ratios (PSNRs) compared to iterative algorithms at low measurement rates and in the presence of measurement noise. We also propose a variant of ReconNet, which uses adversarial loss in order to further improve reconstruction quality. We discuss how adding a fully connected layer to the existing ReconNet architecture allows for jointly learning the measurement matrix and the reconstruction algorithm in a single network. Experiments on real data obtained from a block compressive imager show that our networks are robust to unseen sensor noise.

For details, see Suhas Lohit, Kuldeep Kulkarni, Ronan Kerviche, Pavan Turaga and Amit Ashok, IEEE Transactions on Computational Imaging, 4(3) pp. 326-340, 2018.

Real-time robust direct and indirect photon separation with polarization imaging

Separation of reflections, such as superimposed scenes behind and in front of a glass window and semi-diffuse surfaces, permits the imaging of objects that are not in direct line of sight and field of view. Existing separation techniques are often computationally intensive, time consuming, and not easily applicable to real-time, outdoor situations. In this work, we apply Stokes algebra and Mueller calculus formulae with a novel edge-based correlation technique to the problem of separating reflections in the visible, near infrared, and long wave infrared wavelengths. Our method exploits spectral information and patch-wise operation for improved robustness and can be applied to optically smooth reflecting and partially transmitting surfaces such as glass and optically semi-diffuse surfaces such as floors, glossy paper, and white painted walls. We demonstrate robust image separation in a variety of indoor and outdoor scenes and real-time acquisition using a division-of-focal-plane polarization camera.

For details, see Yitian Ding, Amit Ashok, and Stanley Pau, Optics Express, 23 (25), pp. 29432-29453, 2017.
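
For readers unfamiliar with the Stokes formalism referred to in the abstract above, the sketch below shows the standard conversion from four intensities behind ideal linear polarizers at 0°, 45°, 90°, and 135° (the orientations commonly found on division-of-focal-plane sensors) to the linear Stokes parameters. It covers only this textbook first step, not the edge-based separation method of the paper. The function names and the ideal-polarizer assumption are illustrative.

```python
import math


def linear_stokes(i0, i45, i90, i135):
    """Linear Stokes parameters from four ideal-polarizer intensities.

    Assumes I(theta) = 0.5 * (S0 + S1*cos(2*theta) + S2*sin(2*theta)),
    i.e. ideal polarizers and no noise (an illustrative assumption).
    """
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                        # horizontal vs. vertical
    s2 = i45 - i135                      # +45 deg vs. -45 deg
    return s0, s1, s2


def dolp_aolp(s0, s1, s2):
    """Degree and angle (radians) of linear polarization."""
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    aolp = 0.5 * math.atan2(s2, s1)
    return dolp, aolp
```

For example, unit-intensity horizontally polarized light gives intensities (1, 0.5, 0, 0.5), which map to a degree of linear polarization of 1 and an angle of 0. Unpolarized light gives equal intensities and a degree of 0.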

Face recognition with non-greedy information-optimal adaptive compressive imaging

Adaptive compressive measurements can offer significant system performance advantages due to online learning over non-adaptive or static compressive measurements for a variety of applications, such as image formation and target identification. However, such adaptive measurements tend to be sub-optimal due to their greedy design. Here, we propose a non-greedy adaptive compressive measurement design framework and analyze its performance for a face recognition task. While a greedy adaptive design aims to optimize the system performance on the next immediate measurement, a non-greedy adaptive design goes beyond that by strategically maximizing the system performance over all future measurements. Our non-greedy adaptive design pursues a joint optimization of measurement design and photon allocation within a rigorous information-theoretic framework. For a face recognition task, simulation studies demonstrate that the proposed non-greedy adaptive design achieves a nearly two- to threefold lower probability of misclassification relative to the greedy adaptive and static designs. The simulation results are validated experimentally on a compressive optical imager testbed.

For details, see Liang C. Huang, Mark A. Neifeld, Amit Ashok, Applied Optics, 55(34), pp.9744-9755, 2016.

ReconNet: Non-Iterative Reconstruction of Images from Compressively Sensed Random Measurements

The goal of this paper is to present a non-iterative and, more importantly, an extremely fast algorithm to reconstruct images from compressively sensed (CS) random measurements. To this end, we propose a novel convolutional neural network (CNN) architecture which takes in CS measurements of an image as input and outputs an intermediate reconstruction. We call this network ReconNet. The intermediate reconstruction is fed into an off-the-shelf denoiser to obtain the final reconstructed image. On a standard dataset of images we show significant improvements in reconstruction results (both in terms of PSNR and time complexity) over state-of-the-art iterative CS reconstruction algorithms at various measurement rates. Further, through qualitative experiments on real data collected using our block single pixel camera (SPC), we show that our network is highly robust to sensor noise and can recover visually better quality images than competitive algorithms at extremely low sensing rates of 0.1 and 0.04. To demonstrate that our algorithm can recover semantically informative images even at a low measurement rate of 0.01, we present a very robust proof of concept real-time visual tracking application.

For details, see Kuldeep Kulkarni, Suhas Lohit, Pavan K. Turaga, Ronan Kerviche, Amit Ashok, In Press IEEE Computer Vision and Pattern Recognition (CVPR) (2016). arXiv:1601.06892v2.

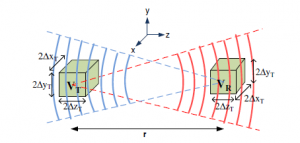

Capacity of electromagnetic communication modes in a noise-limited optical system

We present capacity bounds of an optical system that communicates using electromagnetic waves between a transmitter and a receiver. The bounds are investigated in conjunction with a rigorous theory of degrees of freedom (DOF) in the presence of noise. By taking into account the different signal-to-noise ratio (SNR) levels, an optimal number of DOF that provides the maximum capacity is defined. We find that for moderate noise levels, the DOF estimate of the number of active modes is approximately equal to the optimum number of channels obtained by a more rigorous water-filling procedure. On the other hand, for very low- or high-SNR regions, the maximum capacity can be obtained using fewer or more channels compared to the number of communicating modes given by the DOF theory. In general, the capacity is shown to increase with increasing size of the transmitting and receiving volumes, whereas it decreases with an increase in the separation between volumes. Under the practical channel constraints of noise and finite available power, the capacity upper bound can be estimated by the well-known iterative water-filling solution to determine the optimal power allocation into the subchannels corresponding to the set of singular values when channel state information is known at the transmitter.

Illustration of the Communication Mode between Rectangular Transmitting and Receiving Volumes.

For details, see Myungjun Lee, Mark A. Neifeld, Amit Ashok, Applied Optics, 55(6), pp. 1333-1342 (2016). DOI: 10.1364/AO.55.001333
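
The water-filling allocation referred to in the abstract above can be sketched as follows. This is a generic textbook version that finds the water level by a sorted search rather than by iteration; it is not the paper's implementation. Here `gains` stands for the per-subchannel gain-to-noise ratios (for example, squared singular values divided by noise power), and both function names are hypothetical.

```python
import math


def water_filling(gains, total_power):
    """Allocate total_power over parallel subchannels by water-filling.

    Channel i receives max(0, level - 1/gains[i]), with the water level
    chosen so that the allocations sum to total_power.
    """
    # Rank channels from strongest to weakest.
    order = sorted(range(len(gains)), key=lambda i: gains[i], reverse=True)
    inv = [1.0 / gains[i] for i in order]  # "floor heights" 1/g_i
    k = len(inv)
    level = 0.0
    while k > 0:
        # Water level if exactly the k strongest channels are active.
        level = (total_power + sum(inv[:k])) / k
        if level > inv[k - 1]:
            break  # weakest active channel still gets positive power
        k -= 1
    powers = [0.0] * len(gains)
    for rank in range(k):
        powers[order[rank]] = level - inv[rank]
    return powers


def capacity_bits(gains, powers):
    """Sum capacity in bits per channel use for the given allocation."""
    return sum(math.log2(1.0 + g * p) for g, p in zip(gains, powers))
```

With gains (4, 1, 0.1), a power budget of 1 activates the two strongest subchannels, while a budget of 0.5 activates only the strongest one. This is consistent with the observation that the optimal number of channels depends on the SNR regime.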

Radar target profiling and recognition based on TSI-optimized compressive sensing kernel

The design of wideband radar systems is often limited by existing analog-to-digital (A/D) converter technology. State-of-the-art A/D rates and high effective number of bits result in rapidly increasing cost and power consumption for the radar system. Therefore, it is useful to consider compressive sensing methods that enable reduced sampling rate, and in many applications, prior knowledge of signals of interest can be learned from training data and used to design better compressive measurement kernels. In this paper, we use a task-specific information-based approach to optimizing sensing kernels for high-resolution radar range profiling of man-made targets. We employ a Gaussian mixture (GM) model for the targets and use a Taylor series expansion of the logarithm of the GM probability distribution to enable a closed-form gradient of information with respect to the sensing kernel. The GM model admits nuisance parameters such as target pose angle and range translation. The gradient is then used in a gradient-based approach to search for the optimal sensing kernel. In numerical simulations, we compare the performance of the proposed sensing kernel design to random projections and to lower-bandwidth waveforms that can be sampled at the Nyquist rate. Simulation results demonstrate that the proposed technique for sensing kernel design can significantly improve performance.

For details, see Yujie Gu, Nathan Goodman, Amit Ashok, IEEE Transactions on Signal Processing, 62(12), pp. 3194-3207 (2014). DOI: 10.1109/TSP.2014.2323022

Cellular imaging of deep organ using two-photon Bessel light-sheet nonlinear structured illumination microscopy

In vivo fluorescent cellular imaging of deep internal organs is highly challenging, because the excitation needs to penetrate through strong scattering tissue and the emission signal is degraded significantly by photon diffusion induced by tissue-scattering. We report that by combining two-photon Bessel light-sheet microscopy with nonlinear structured illumination microscopy (SIM), live samples up to 600 microns wide can be imaged by light-sheet microscopy with 500 microns penetration depth, and diffused background in deep tissue light-sheet imaging can be reduced to obtain clear images at cellular resolution in depth beyond 200 microns. We demonstrate in vivo two-color imaging of pronephric glomeruli and vasculature of zebrafish kidney, whose cellular structures located at the center of the fish body are revealed in high clarity by two-color two-photon Bessel light-sheet SIM.

3D image of kidney cellular structure of a live double transgenic zebrafish larva showing endothelial cells in the vasculature (GFP, green) and podocytes (mCherry, red). Images were acquired with two-photon Bessel light-sheet nonlinear structured illumination microscopy.

For details, see M. Zhao, et al., Biomed. Opt. Express 5, 1296-1308 (2014).

Image compression based on task-specific information

Compression is a key component in imaging systems that have limited power, bandwidth, or other resources. In many applications, images are acquired to support a specific task such as target detection or classification. However, standard image compression techniques such as JPEG or JPEG2000 (J2K) are often designed to maximize image quality as measured by conventional quality metrics such as mean-squared error (MSE) or Peak Signal to Noise Ratio (PSNR). This mismatch between image quality metrics and task performance motivates our investigation of image compression using a task-specific metric designed for the designated tasks. Given the selected target detection task, we first propose a metric based on conditional class entropy. The proposed metric is then incorporated into a J2K encoder to create compressed codestreams that are fully compliant with the J2K standard. Experimental results illustrate that the decompressed images obtained using the proposed encoder greatly improve performance in detection/classification tasks over images encoded using a conventional J2K encoder.

For details, see Lingling Pu, Michael W. Marcellin, Ali Bilgin, Amit Ashok, “Image compression based on task-specific information,” IEEE International Conference on Image Processing (ICIP), pp. 4817-4821 (2014).

Information optimal compressive sensing: static measurement design

The compressive sensing paradigm exploits the inherent sparsity/compressibility of signals to reduce the number of measurements required for reliable reconstruction/recovery. In many applications additional prior information beyond signal sparsity, such as structure in sparsity, is available, and current efforts are mainly limited to exploiting that information exclusively in the signal reconstruction problem. In this work, we describe an information-theoretic framework that incorporates the additional prior information as well as appropriate measurement constraints in the design of compressive measurements. Using a Gaussian binomial mixture prior we design and analyze the performance of optimized projections relative to random projections under two specific design constraints and different operating measurement signal-to-noise ratio (SNR) regimes. We find that the information-optimized designs yield significant, in some cases nearly an order of magnitude, improvements in the reconstruction performance with respect to the random projections. These improvements are especially notable in the low measurement SNR regime where the energy-efficient design of optimized projections is most advantageous. In such cases, the optimized projection design departs significantly from random projections in terms of their incoherence with the representation basis. In fact, we find that maximizing the incoherence of projections with the representation basis is not necessarily optimal in the presence of additional prior information and finite measurement noise/error. We also apply the information-optimized projections to the compressive image formation problem for natural scenes, and the improved visual quality of reconstructed images with respect to random projections and other compressive measurement design affirms the overall effectiveness of the information-theoretic design framework.

For details, see Amit Ashok, Liang-Chih Huang, and Mark A. Neifeld, J. Opt. Soc. Am. A Vol. 30, Issue 5, pp. 831-853 (2013).

Space-time compressive imaging

Compressive imaging systems typically exploit the spatial correlation of the scene to facilitate a lower dimensional measurement relative to a conventional imaging system. In natural time-varying scenes there is a high degree of temporal correlation that may also be exploited to further reduce the number of measurements. In this work we analyze space–time compressive imaging using Karhunen–Loève (KL) projections for the read-noise-limited measurement case. Based on a comprehensive simulation study, we show that a KL-based space–time compressive imager offers higher compression relative to space-only compressive imaging. For a relative noise strength of 10% and reconstruction error of 10%, we find that space–time compressive imaging with 8×8×16 spatiotemporal blocks yields about 292× compression compared to a conventional imager, while space-only compressive imaging provides only 32× compression. Additionally, under high read-noise conditions, a space–time compressive imaging system yields lower reconstruction error than a conventional imaging system due to the multiplexing advantage. We also discuss three electro-optic space-time compressive imaging architecture classes, including charge-domain processing by a smart focal plane array (FPA). Space–time compressive imaging using a smart FPA provides an alternative method to capture the nonredundant portions of time-varying scenes.

For details, see Vicha Treeaporn, Amit Ashok, Mark A. Neifeld, “Space-time Compressive Imaging,” Applied Optics, 51(4), pp. A67-A79 (2012).

Compressive imaging: hybrid measurement basis design

The inherent redundancy in natural scenes forms the basis of compressive imaging where the number of measurements is less than the dimensionality of the scene. The compressed sensing theory has shown that a purely random measurement basis can yield good reconstructions of sparse objects with relatively few measurements. However, additional prior knowledge about object statistics that is typically available is not exploited in the design of the random basis. In this work, we describe a hybrid measurement basis design that exploits the power spectral density statistics of natural scenes to minimize the reconstruction error by employing an optimal combination of a nonrandom basis and a purely random basis. Using simulation studies, we quantify the reconstruction error improvement achievable with the hybrid basis for a diverse set of natural images. We find that the hybrid basis can reduce the reconstruction error by up to 77% or, equivalently, requires fewer measurements to achieve a desired reconstruction error compared to the purely random basis. It is also robust to varying levels of object sparsity and yields as much as 40% lower reconstruction error compared to the random basis in the presence of measurement noise.

For details, see Amit Ashok, Mark A. Neifeld, “Compressive Imaging: Hybrid Measurement Basis Design,” JOSA A, 28(6), pp. 1041-1050 (2011).

Block-wise motion detection using compressive imaging system

A block-wise motion detection strategy based on compressive imaging, also referred to as feature-specific imaging (FSI), is described in this work. A mixture of Gaussian distributions is used to model the background in a scene. Motion is detected in individual object blocks using feature measurements. Gabor, Hadamard binary and random binary features are studied. Performance of motion detection methods using pixel-wise measurements is analyzed and serves as a baseline for comparison with motion detection techniques based on compressive imaging. ROC (Receiver Operating Characteristic) curves and AUC (Area Under Curve) metrics are used to quantify the algorithm performance. Because an FSI system yields a larger measurement SNR (Signal-to-Noise Ratio) than a traditional system, motion detection methods based on the FSI system have better performance. We show that motion detection algorithms using Hadamard and random binary features in an FSI system yield AUC values of 0.978 and 0.969 respectively. The pixel-based methods are only able to achieve a lower AUC value of 0.627.

For details, see Jun Ke, Amit Ashok, Mark A. Neifeld, “Block-wise Motion Detection Using Compressive Imaging System,” Optics Communications, 284(5), pp. 1170-1180 (2011).
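
To make the AUC figures quoted in the abstract above concrete, the sketch below computes the area under the ROC curve through its pairwise-ranking interpretation: the probability that a randomly chosen "motion" block receives a higher detector score than a randomly chosen "static" block, with ties counted as one half. The function name and the toy scores are illustrative assumptions, not data from the paper.

```python
def auc_from_scores(motion_scores, static_scores):
    """Area under the ROC curve from detector scores of the two classes.

    Equivalent to the normalized Mann-Whitney U statistic; runs in
    O(len(motion_scores) * len(static_scores)) time.
    """
    wins = 0.0
    for p in motion_scores:
        for n in static_scores:
            if p > n:
                wins += 1.0   # correctly ranked pair
            elif p == n:
                wins += 0.5   # tie counts as half
    return wins / (len(motion_scores) * len(static_scores))


# Toy scores (made up for illustration): 8 of 9 pairs are ranked correctly.
example_auc = auc_from_scores([0.9, 0.8, 0.4], [0.5, 0.3, 0.2])
```

An AUC of 1.0 corresponds to perfect separation of the two classes, and 0.5 corresponds to chance-level detection.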

Increased field of view through optical multiplexing

Traditional approaches to wide field of view (FoV) imager design usually lead to overly complex optics with high optical mass and/or pan-tilt mechanisms that incur significant mechanical/weight penalties, which limit their applications, especially on mobile platforms such as unmanned aerial vehicles (UAVs). We describe a compact wide FoV imager design based on superposition imaging that employs thin film shutters and multiple beamsplitters to reduce system weight and eliminate mechanical pointing. The performance of the superposition wide FoV imager is quantified using a simulation study and is experimentally demonstrated. Here, a threefold increase in the FoV relative to the narrow FoV imaging optics employed in the imager design is realized. The performance of a superposition wide FoV imager is analyzed relative to a traditional wide FoV imager and we find that it can offer comparable performance.

For details, see Vicha Treeaporn, Amit Ashok, Mark A. Neifeld, “Increased field of view through optical multiplexing,” Optics Express, 18(21), pp. 22432-22445 (2010).

Object reconstruction from adaptive compressive measurements in feature-specific imaging

Static feature-specific imaging (SFSI), where the measurement basis remains fixed/static during the data measurement process, has been shown to be superior to conventional imaging for reconstruction tasks. Here, we describe an adaptive approach that utilizes past measurements to inform the choice of measurement basis for future measurements in an FSI system, with the goal of maximizing the reconstruction fidelity while employing the fewest measurements. An algorithm to implement this adaptive approach is developed for FSI systems, and the resulting systems are referred to as adaptive FSI (AFSI) systems. A simulation study is used to analyze the performance of the AFSI system for two choices of measurement basis: principal component (PC) and Hadamard. Here, the root mean squared error (RMSE) metric is employed to quantify the reconstruction fidelity. We observe that an AFSI system achieves as much as 30% lower RMSE compared to an SFSI system. The performance improvement of the AFSI systems is verified using an experimental setup employing a digital micromirror device (DMD) array.

For details, see Jun Ke, Amit Ashok, Mark A. Neifeld, “Object reconstruction from adaptive compressive measurements in feature-specific imaging,” Applied Optics, 49(34), pp. H27-H39 (2010).

Point spread function engineering for iris recognition imaging system design

Undersampling in the detector array degrades the performance of iris-recognition imaging systems. We find that an undersampling of 8×8 reduces the iris-recognition performance by nearly a factor of 4 (on CASIA iris database), as measured by the false rejection ratio (FRR) metric. We employ optical point spread function (PSF) engineering via a Zernike phase mask in conjunction with multiple subpixel shifted image measurements (frames) to mitigate the effect of undersampling. A task-specific optimization framework is used to engineer the optical PSF and optimize the postprocessing parameters to minimize the FRR. The optimized Zernike phase enhanced lens (ZPEL) imager design with one frame yields an improvement of nearly 33% relative to a thin observation module by bounded optics (TOMBO) imager with one frame. With four frames the optimized ZPEL imager achieves an FRR equal to that of the conventional imager without undersampling. Further, the ZPEL imager design using 16 frames yields an FRR that is actually 15% lower than that obtained with the conventional imager without undersampling.

For details, see Amit Ashok, Mark A. Neifeld, “Point Spread Function Engineering for Iris Recognition Imaging System Design,” Applied Optics, 49(10), pp. B26-B39 (2010). Also appears in Virtual Journal of Biomedical Optics, 5(8), 2010.

Compressive imaging system design using task-specific information

For details, see Amit Ashok, Pawan Baheti, Mark A. Neifeld, “Compressive imaging system design using task-specific information,” Applied Optics, 47(25), pp. 4457-4471 (2008).

Task Specific Information for Imaging System Analysis

Imagery is often used to accomplish some computational task. In such cases there are some aspects of the imagery that are relevant to the task and other aspects that are not. In order to quantify the task-specific quality of such imagery, we introduce the concept of task-specific information (TSI). A formal framework for the computation of TSI is described and is applied to three common tasks: target detection, classification, and localization. We demonstrate the utility of TSI as a metric for evaluating the performance of three imaging systems: ideal geometric, diffraction-limited, and projective. The TSI results obtained from the simulation study quantify the degradation in the task-specific performance with optical blur. We also demonstrate that projective imagers can provide higher TSI than conventional imagers at small signal-to-noise ratios.

For details, see Mark A. Neifeld, Amit Ashok, Pawan Baheti, “Task Specific Information for Imaging System Analysis,” JOSA A, 24(12), pp. B25-B41 (2007).

Pseudorandom phase masks for super-resolution imaging from sub-pixel shifting

We present a method for overcoming the pixel-limited resolution of digital imagers. Our method combines optical point-spread function engineering with subpixel image shifting. We place an optimized pseudorandom phase mask in the aperture stop of a conventional imager and demonstrate the improved performance that can be achieved by combining multiple subpixel shifted images. Simulation results show that the pseudorandom phase-enhanced lens (PRPEL) imager achieves as much as 50% resolution improvement over a conventional multiframe imager. The PRPEL imager also enhances reconstruction root-mean-squared error by as much as 20%. We present experimental results that validate the predicted PRPEL imager performance.

For details, see Amit Ashok, Mark A. Neifeld, “Pseudo-random phase masks for super-resolution imaging from sub-pixel shifting,” Applied Optics, 46(12), pp. 2256-2268 (2007).

Information-based analysis of simple incoherent imaging systems

We present an information-based analysis of three candidate imagers: a conventional lens system, a cubic phase mask system, and a random phase mask system. For source volumes comprising relatively few equal-intensity point sources we compare both the axial and lateral information content of detector intensity measurements. We include the effect of additive white Gaussian noise. Single and distributed aperture imaging is studied. A single detector in each of two apertures using conventional lenses can yield 36% of the available scene information when the source volume contains only a single point source. The addition of cubic phase masks yields nearly 74% of the scene information. An identical configuration using random phase masks offers the best performance with 89% scene information available in the detector intensity measurements.

For details, see Amit Ashok, Mark A. Neifeld, “Information-based analysis of simple incoherent imaging systems,” Optics Express, 11(8), pp. 2153-2162 (2003).