Hybrid-SCAPE

As the scope and volume of data available for exploration grow, and as tasks become more complex, often requiring a team of experts working together, there is a great need for 3D visualization systems that can display complex datasets in ways that facilitate information discovery and collaboration. While 3D displays are key enabling technologies for a fully integrated, interactive 3D system, visualization methods and interaction techniques are essential components that critically affect how effective the system is for complex tasks. Visualization methods help users correlate and interpret complex datasets by exploring alternative perspectives, scales, resolutions, and temporal dynamics. Interaction techniques allow users to manipulate digital objects, perform system control, and add or modify digital information. However, most existing 3D visualization methods cannot adaptively visualize data at a level of complexity appropriate to a user’s task needs. Interaction techniques based on conventional interface devices such as joysticks and 3D mice lack intuitiveness and ease of use. Moreover, few existing visualization systems support and mediate fluid collaborative activities.

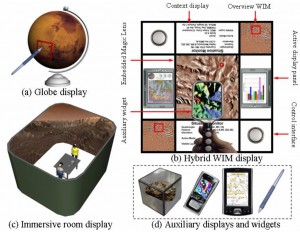

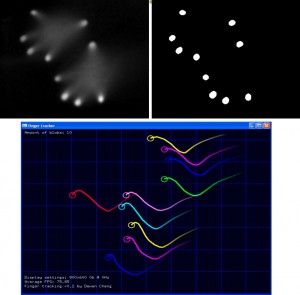

To respond to these challenges, under a recent NSF CAREER award (2007-2012), we have been developing a heterogeneous display environment that comprises a large-scale immersive environment, a 2D/3D hybrid display in which a 3D display area is surrounded by a high-resolution 2D tabletop display, and various other 2D and 3D hand-held displays. The system is being integrated, and will continue to be integrated, with an array of 2D and 3D user interaction techniques such as multi-touch sensing, hand gesture recognition, and other advanced user interfaces. From the display perspective, this platform assists users in navigating and controlling their level of immersion in the digital realm. From the interaction perspective, the platform supports intuitive user interaction with both the physical and digital worlds through physical manipulation and gesture-based interaction metaphors. Furthermore, this platform provides a unique display environment for studying visualization and interaction techniques that may improve users’ ability to correlate and understand complex datasets and may support collaborative tasks. For instance, we recently evaluated the effect of three multi-scale interfaces in large-scale information visualization. This comparison study helps clarify how the arrangement of information windows and the methods used to position information relative to a viewer affect the user’s ability to gather information and interpret spatial relationships.

This project builds on an earlier project: SCAPE

Design of a hybrid-WIM workbench display

Multi-Touch User Interface